Inside Meta’s Cloud Deal with Google for AI Infrastructure

Updated: Aug 23, 2025 • Estimated read: 18–22 minutes • AI-generated images included

Even the biggest rivals in tech sometimes become practical partners. The AI infrastructure partnership between Meta (parent of Facebook, Instagram and WhatsApp) and Google Cloud illustrates this trend. This article explains in simple language why the deal matters, what the architecture might look like, and what it means for developers, advertisers and users. It also covers multicloud best practices, risks and FAQs.

Table of Contents

- Key Takeaways

- Introduction

- Why Meta Needs Cloud for AI Now

- What We Know (and Don’t) About the Deal

- How Google Cloud’s AI Stack Fits Meta’s Needs

- Inside the Architecture

- Why Google Cloud? Strategic Reasons

- What Meta Gains—Beyond Compute

- What Google Cloud Gains

- Implications for the AI Ecosystem

- Risks and Challenges

- Product Impact

- Practical Multicloud AI Playbook

- Costs and FinOps

- Security & Data Governance

- Sustainability

- Regulatory & Residency

- Minimizing Vendor Lock‑in

- Timeline

- KPIs & Success Metrics

- FAQs

- Conclusion

Key Takeaways

- Scale + Speed + Flexibility: The deal complements Meta’s in‑house infrastructure; the cloud provides burst capacity and regional inference.

- Multicloud Pattern: Steady and sensitive workloads stay on‑prem; burst training and low‑latency serving run in the cloud.

- Accelerated Compute: NVIDIA GPUs (possibly TPUs), fast networking, global regions, mature security.

- Mutual Benefit: Meta = capacity/resilience; Google Cloud = marquee AI customer + platform learnings.

- Industry Norm: Hyperscalers mix on‑prem + cloud to meet AI demand and hedge supply risk.

Introduction

In the AI race, speed is king. Rapid iteration on foundation models, recommendation engines and safety pipelines requires elastic compute, high‑speed networks and disciplined MLOps. Meta’s partnership with Google Cloud is less about rivalry than pragmatism: securing additional capacity, diversifying risk and shipping features faster.

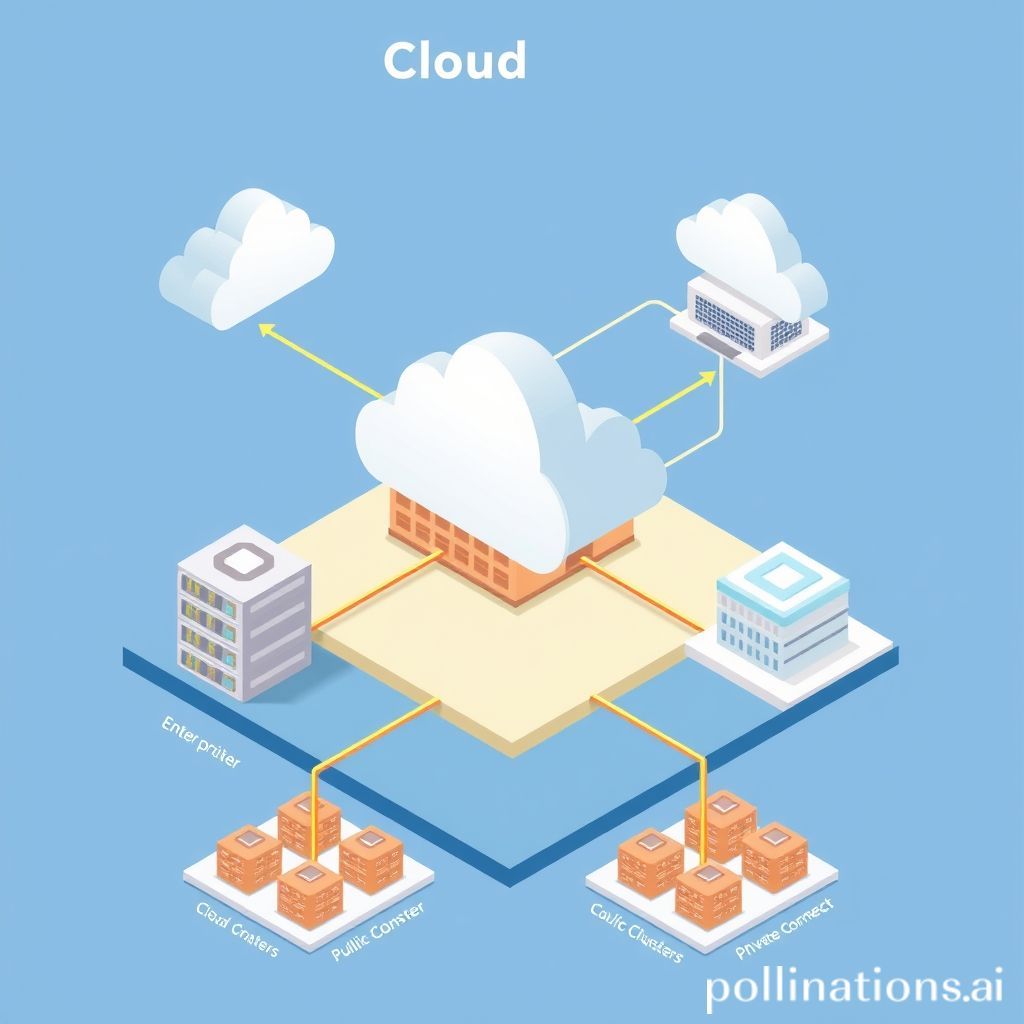

AI image: Hybrid cloud—enterprise DCs connected to public cloud GPUs via private interconnect.

Why Meta Needs Cloud for AI Now

The AI Arms Race Needs Massive Compute

LLMs and advanced recommender systems need tens of thousands of accelerators, ultra‑fast fabrics and scalable storage. Meta already runs huge infrastructure, but peak spikes (product launches, new model families) call for the elasticity of the cloud.

- Accelerators: NVIDIA H100‑class GPUs (TPUs possibly for some roles)

- Networking: Low‑latency fabrics for all‑reduce and parameter sharding

- Storage: Datasets, checkpoints, logs at scale

- Orchestration: Thousands of nodes, resilient scheduling
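To put "massive compute" in numbers, a widely used back-of-envelope rule estimates dense transformer training at about 6 × parameters × training tokens FLOPs. The sketch below converts that into GPU-hours. The per-GPU peak (about 989 dense BF16 TFLOPS, roughly an H100 SXM spec-sheet figure), the 40% utilization and the 70B-parameter / 15T-token example are assumptions for illustration, not figures from the deal.

```python
def training_gpu_hours(params: float, tokens: float,
                       peak_flops: float = 989e12,  # assumed per-GPU dense BF16 peak (FLOP/s)
                       mfu: float = 0.40) -> float:  # assumed model FLOPs utilization
    """Estimate GPU-hours for dense transformer training using FLOPs ~= 6 * N * D."""
    total_flops = 6 * params * tokens
    effective_flops_per_gpu = peak_flops * mfu  # sustained FLOP/s actually achieved per GPU
    gpu_seconds = total_flops / effective_flops_per_gpu
    return gpu_seconds / 3600


if __name__ == "__main__":
    # Hypothetical 70B-parameter model trained on 15T tokens.
    hours = training_gpu_hours(70e9, 15e12)
    print(f"~{hours:,.0f} GPU-hours")
    print(f"~{hours / 16_000 / 24:.0f} days on a hypothetical 16,000-GPU cluster")
```

Even with generous assumptions the answer lands in the millions of GPU-hours, which is why short, intense training bursts are a natural fit for rented cloud capacity.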

Training vs Inference

- Training: Bursty and compute‑hungry; demand spikes for days or weeks at a time.

- Inference: Always‑on, latency‑sensitive; billions of requests, multi‑region presence.

The cloud absorbs training bursts, and running inference closer to users improves latency and resilience.
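The burst pattern can be sketched as a simple placement rule: fill in-house capacity first, then overflow the remainder to the cloud. Everything here (the `Job` type, GPU counts, the priority convention) is a hypothetical illustration, not Meta's actual scheduler.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    gpus: int
    priority: int  # higher = considered first (hypothetical convention)


def place_jobs(jobs: list[Job], onprem_free_gpus: int) -> dict[str, str]:
    """Greedy burst placement: jobs that fit take on-prem GPUs; the rest burst to cloud."""
    placement: dict[str, str] = {}
    free = onprem_free_gpus
    for job in sorted(jobs, key=lambda j: j.priority, reverse=True):
        if job.gpus <= free:
            placement[job.name] = "on-prem"
            free -= job.gpus
        else:
            placement[job.name] = "cloud"  # overflow to elastic cloud capacity
    return placement


if __name__ == "__main__":
    jobs = [Job("ranking-retrain", 512, 3), Job("llm-pretrain", 4096, 2), Job("ablation", 256, 1)]
    print(place_jobs(jobs, onprem_free_gpus=2048))
```

A lower-priority job can still backfill on-prem GPUs left over after a larger job bursts. Real schedulers add preemption, quotas and data-locality constraints that this sketch ignores.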

AI image: Global cloud regions enable low-latency inference and residency controls.

Multicloud = Default

Dependence on a single provider is risky. Multicloud provides redundancy, pricing leverage, hardware diversity and regulatory flexibility.

What We Know (and Don’t) About the Deal

Common Themes

- Large‑scale AI workloads, multiple regions, accelerated compute access.

- Meta gets speed and capacity; Google Cloud gets a marquee AI customer.

Typical Hyperscale AI Deal Ingredients

- Compute: NVIDIA GPUs; TPUs in select cases.

- Networking: Private interconnects, low‑latency peering.

- Storage: Object + high‑perf file/block options.

- Orchestration: Kubernetes (GKE), PyTorch Distributed.

- Security: VPC isolation, IAM, encryption, audit logs.

- Commercials: Commit discounts, SLAs, volume tiers.

- Sustainability: Carbon‑aware placement and reporting.

How Google Cloud’s AI Stack Fits Meta’s Needs

Compute: NVIDIA GPUs, Possibly TPUs

Because the PyTorch ecosystem is so mature, most LLM training runs on NVIDIA GPUs. Google Cloud offers high‑bandwidth GPU clusters and GKE scheduling. TPUs are strongest with JAX and TensorFlow workloads (PyTorch runs on them via PyTorch/XLA) and may offer a better cost/performance fit for select inference or training cases.

AI image: GPUs remain default for PyTorch LLMs; TPUs shine in targeted use cases.

Storage & Data Services

Cloud storage is used selectively for curated training sets, checkpoints and model artifacts, not as a wholesale migration. The focus is performance, governance and reproducibility.

Networking & Interconnect

Private interconnects provide predictable throughput and low jitter; the region strategy balances data residency against latency.
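A quick worked example shows why dedicated bandwidth matters when checkpoints and datasets move between sites. The 1 TB checkpoint, the link speeds and the 80% efficiency factor below are assumptions chosen for illustration.

```python
def transfer_time_hours(size_bytes: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to move `size_bytes` over a `link_gbps` (gigabits/s) link at a given efficiency."""
    bits = size_bytes * 8
    effective_bps = link_gbps * 1e9 * efficiency  # efficiency covers protocol overhead, contention
    return bits / effective_bps / 3600


if __name__ == "__main__":
    checkpoint = 1e12  # hypothetical 1 TB checkpoint
    for gbps in (10, 100, 400):
        print(f"{gbps:>4} Gbps: {transfer_time_hours(checkpoint, gbps) * 60:.1f} minutes")
```

At 10 Gbps the assumed checkpoint takes over a quarter of an hour to copy; at 100 Gbps it takes under two minutes, which keeps replication and failover practical.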

MLOps: Managed vs Self‑Managed

Blended approach: managed primitives (registries/logging) + self‑directed orchestration (GKE, PyTorch Distributed) for control and portability.

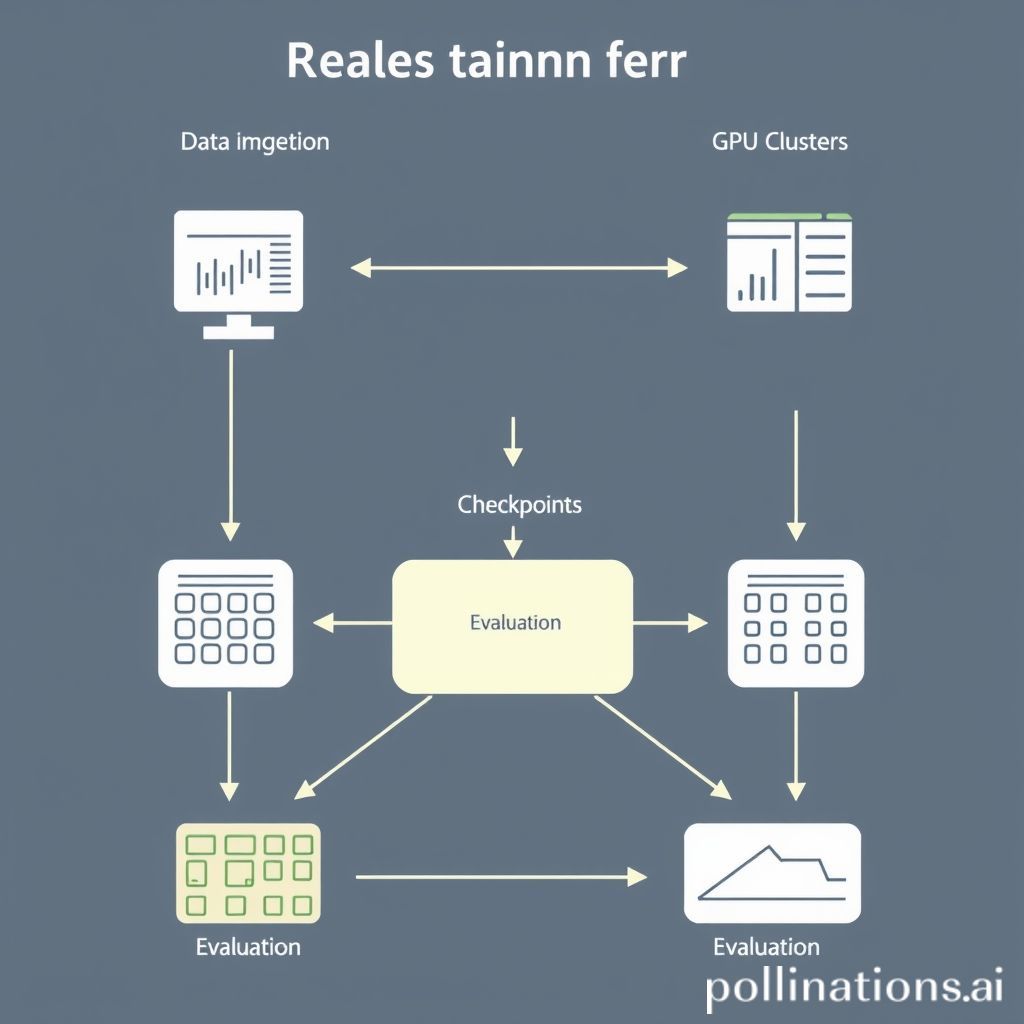

Inside the Architecture

1) Data Preparation

- Filtering, deduplication, safety checks; dataset versioning.

- Approved slices to cloud object storage; encryption + short‑lived credentials.
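Exact deduplication and dataset versioning can both be built on content hashes. This is a minimal sketch using Python's standard `hashlib`; the plain-string record format and the whitespace/case normalization are simplifying assumptions, and it only catches exact duplicates.

```python
import hashlib
import json


def dedup_exact(records: list[str]) -> list[str]:
    """Drop exact duplicates (after whitespace/case normalization), keeping the first occurrence."""
    seen: set[str] = set()
    kept = []
    for text in records:
        normalized = " ".join(text.lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            kept.append(text)
    return kept


def dataset_version(records: list[str]) -> str:
    """Deterministic version ID: hash of the ordered per-record hashes (a simple manifest)."""
    manifest = [hashlib.sha256(r.encode("utf-8")).hexdigest() for r in records]
    return hashlib.sha256(json.dumps(manifest).encode("utf-8")).hexdigest()[:16]


if __name__ == "__main__":
    raw = ["Hello world", "hello   WORLD", "Another sample"]
    clean = dedup_exact(raw)
    print(clean, dataset_version(clean))
```

Because the version ID depends only on content, the same curated slice always gets the same ID on-prem and in the cloud, which supports reproducible training runs.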

2) Training at Scale

- GKE‑scheduled GPU jobs; PyTorch Distributed + mixed precision.

- Checkpoint replication; spot for experiments, reserved for critical runs.

- Topology‑aware scheduling, gradient checkpointing, fused kernels.
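Spot capacity is cheaper but can be reclaimed, so checkpoint frequency matters. One classic rule of thumb is Young's approximation: interval ≈ sqrt(2 × checkpoint write time × mean time between interruptions). The sketch assumes interruptions arrive independently and ignores restart time; the 5-minute write and 6-hour interruption interval are hypothetical numbers.

```python
import math


def young_checkpoint_interval(checkpoint_seconds: float, mtbi_seconds: float) -> float:
    """Young's first-order optimum for compute time between checkpoints."""
    return math.sqrt(2 * checkpoint_seconds * mtbi_seconds)


def expected_overhead(interval: float, checkpoint_seconds: float, mtbi_seconds: float) -> float:
    """Approximate fraction of time lost: checkpoint writes plus, per interruption,
    about half an interval of recomputation (restart time ignored)."""
    return checkpoint_seconds / interval + interval / (2 * mtbi_seconds)


if __name__ == "__main__":
    delta, mtbi = 300, 6 * 3600  # hypothetical: 5-min checkpoint write, 6-h mean time between preemptions
    tau = young_checkpoint_interval(delta, mtbi)
    print(f"checkpoint every ~{tau / 60:.0f} min, ~{expected_overhead(tau, delta, mtbi):.1%} overhead")
```

Checkpointing more often wastes time writing; less often wastes time redoing work after a preemption. The square-root rule balances the two terms.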

3) Evaluation & Safety

- Held‑out evals, adversarial probes, bias audits.

- Promotion gates based on quality + safety thresholds.
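A promotion gate is essentially a set of thresholds that must all pass. The metric names, threshold values and no-regression rule below are hypothetical placeholders: a candidate ships only if it clears every safety bar and does not regress against the current model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    quality: float      # held-out benchmark score, higher is better (hypothetical metric)
    unsafe_rate: float  # fraction of adversarial probes producing unsafe output
    bias_gap: float     # largest metric gap across audited groups


def passes_gate(candidate: EvalResult, baseline: EvalResult,
                max_unsafe: float = 0.01, max_bias_gap: float = 0.05,
                max_quality_drop: float = 0.0) -> tuple[bool, list[str]]:
    """Return (promote?, reasons) from hard safety limits plus a quality no-regression check."""
    reasons = []
    if candidate.quality < baseline.quality - max_quality_drop:
        reasons.append("quality regression vs baseline")
    if candidate.unsafe_rate > max_unsafe:
        reasons.append("unsafe rate above threshold")
    if candidate.bias_gap > max_bias_gap:
        reasons.append("bias gap above threshold")
    return (not reasons, reasons)
```

Returning the reasons, not just a boolean, makes blocked promotions easy to explain and audit.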

4) Inference & Serving

- Multi‑region endpoints; autoscale by QPS + latency SLOs.

- Quantization, batching, caching to cut cost.

- Canary rollouts; policy and moderation integration.
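Autoscaling on both QPS and a latency SLO can be sketched as: size for traffic with headroom, then add replicas if observed p99 latency breaches the SLO. Per-replica capacity, the 30% headroom, the 20% step-up and the replica bounds are assumed values; production autoscalers (for example the Kubernetes Horizontal Pod Autoscaler) also smooth signals over time.

```python
import math


def desired_replicas(qps: float, per_replica_qps: float, p99_ms: float, slo_ms: float,
                     current: int, headroom: float = 0.3,
                     min_replicas: int = 2, max_replicas: int = 500) -> int:
    """Replica target from traffic (with headroom) plus a latency-SLO correction."""
    by_traffic = math.ceil(qps * (1 + headroom) / per_replica_qps)
    target = max(by_traffic, min_replicas)
    if p99_ms > slo_ms:
        # SLO breach: go at least 20% above the current count (hypothetical step size)
        target = max(target, math.ceil(current * 1.2))
    return min(target, max_replicas)
```

The latency term catches cases the QPS term misses, such as longer prompts that make each request more expensive at the same request rate.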

5) Observability & Cost

- Unified metrics, tracing, logs across train/serve.

- Budgets/alerts; per‑project chargeback dashboards.

- Error budgets; blameless postmortems; shared runbooks.
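Error budgets turn an SLO into a concrete allowance. Here is a worked example for an assumed 99.9% availability SLO over a 30-day window, expressed both as downtime minutes and as a request-based budget.

```python
def error_budget_minutes(slo: float, window_days: float = 30) -> float:
    """Allowed full-outage minutes in the window for an availability SLO (e.g. 0.999)."""
    return (1 - slo) * window_days * 24 * 60


def budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the request-based error budget still unspent (negative = overspent)."""
    allowed_failures = (1 - slo) * total_requests
    return 1 - failed_requests / allowed_failures


if __name__ == "__main__":
    print(f"{error_budget_minutes(0.999):.1f} minutes of downtime allowed per 30 days")
    print(f"{budget_remaining(0.999, 1_000_000, 250):.0%} of the budget left")
```

When the remaining budget approaches zero, teams typically slow risky rollouts; when plenty is left, they can ship faster.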

AI image: ingestion → GPU clusters → checkpoints → eval → serving.

Why Google Cloud? Strategic Reasons

- Capacity & Availability: New chips, ready clusters, multiple regions.

- Cost Predictability: Committed‑use discounts; price/perf balance.

- Global Reach: Low‑latency regional inference; residency options.

- Sustainability: Renewable‑energy sourcing and efficient data‑center cooling.

What Meta Gains—Beyond Compute

- Faster experiments and rollouts, without waiting on hardware procurement.

- Flexibility without lock‑in; core IP under tight control.

- Diversified risk; failover paths; DR for artifacts.

What Google Cloud Gains

- Revenue + credibility in accelerated computing.

- Platform learnings (scheduling, autoscaling, networking).

- Ecosystem halo: Kubernetes + PyTorch at scale.

Implications for the AI Ecosystem

Open‑Source LLMs Get a Lift

Llama models stand to gain better deployment paths, inference optimizations and safety tooling, giving startups and enterprises more flexible options.

Developers on Google Cloud

- Optimized PyTorch images & templates.

- Reference architectures (RLHF, eval, guardrails).

- Better cost controls for long runs.

Competitive Landscape

- AWS: Deep NVIDIA partnership + enterprise scale.

- Azure: OpenAI alignment + enterprise tooling.

- Google Cloud: TPUs, analytics, research depth + growing GPU fleets.

Risks & Challenges

Data Privacy & Compliance

- Strict classification, anonymization, region controls.

- Audits, logs, and certifications for assurance.

Integration Complexity

- Consistent tooling across on‑prem and cloud.

- Network/security policies; artifact versioning.

Performance vs Cost

- Prevent idle GPUs; right‑size clusters.

- Optimize training loops; checkpoint pruning.
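Checkpoint pruning is usually a retention rule. One possible policy, assumed here for illustration, keeps the newest K checkpoints plus every Nth training step as a long-term milestone and marks everything else for deletion.

```python
def checkpoints_to_delete(steps: list[int], keep_last: int = 3,
                          milestone_every: int = 10_000) -> list[int]:
    """Return checkpoint steps that are neither among the newest `keep_last` nor milestones."""
    ordered = sorted(steps)
    recent = set(ordered[-keep_last:]) if keep_last > 0 else set()
    return [s for s in ordered if s not in recent and s % milestone_every != 0]


if __name__ == "__main__":
    saved = list(range(2000, 24001, 2000))  # hypothetical: a checkpoint every 2,000 steps
    print(checkpoints_to_delete(saved))
```

Keeping milestones preserves the ability to reproduce or roll back to earlier stages of a run while still trimming most of the storage.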

Operational Alignment

- Joint incident response; clear escalation paths.

- Shared SLOs and runbooks.

AI image: Security by design—encryption, isolation, auditability.

Product Impact

- Smarter assistants in messaging (better reasoning, multimodal).

- Enhanced creative tools (image/video generation, editing).

- More relevant feeds and ads (faster iteration on ranking models).

- Better safety/integrity via scalable inference.

Practical Multicloud AI Playbook

- Decide What Runs Where: On‑prem steady/sensitive; cloud bursts & regional serving.

- Standardize Stack: Containers, Kubernetes, PyTorch; version all artifacts.

- Govern Data: Classify, encrypt, isolate (VPC); audit access.

- Optimize Training: Mixed precision, gradient checkpointing, topology‑aware scheduling.

- Serve Smart: Quantize, batch, autoscale, multi‑region endpoints.

- Reliability by Design: Canary, circuit breakers, SLOs, postmortems.

- FinOps Discipline: Tags, budgets, alerts, unit costs ($/train hr, $/1k inferences).

- Model Governance: Registry, lineage, eval gates, documented limits.
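Of the reliability patterns above, a circuit breaker is the easiest to show in a few lines: after repeated failures it stops calling a dependency for a cool-down period, then lets trial requests through. The thresholds and timing are assumed values, and the clock is injectable so the behavior can be tested.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Minimal closed -> open -> half-open circuit breaker."""

    def __init__(self, failure_threshold: int = 5, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow_request(self) -> bool:
        if self.opened_at is None:
            return True  # closed: traffic flows normally
        # open: allow trial requests only once the cool-down has elapsed (half-open)
        return self.clock() - self.opened_at >= self.reset_after_s

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None  # a success closes the circuit

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()  # (re)open and restart the cool-down
```

Failing fast protects a struggling model endpoint from a retry storm and lets callers fall back, for example to a cached or smaller model.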

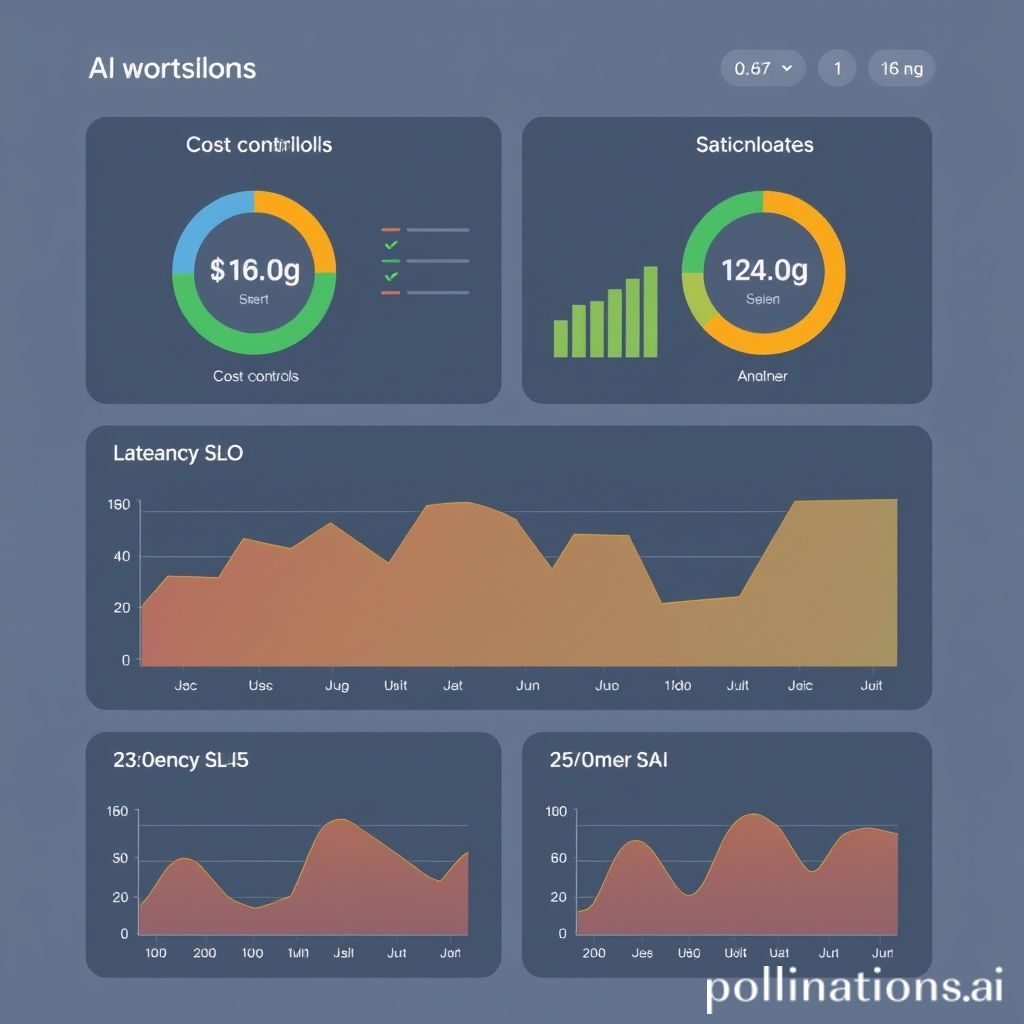

AI image: utilization, latency SLOs, and cost controls in one view.

Costs & FinOps

To control AI spend, treat capacity like a product: instrument, forecast, iterate. Key levers (indicative ranges; actual gains depend on the workload):

- GPU hours: Mixed precision, kernel fusion → 15–30% faster training.

- Utilization: Topology‑aware scheduling, packed jobs → 10–20% higher.

- Serving cost: Quantization, batching, caching → 20–50% lower $/1k requests.

- Storage: Checkpoint pruning, lifecycle rules → 25–60% savings.

- Commit discounts: Rightsize terms; avoid over‑commit.
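The over-commit risk is easy to quantify. If a commitment gives a discount d off the on-demand price and committed capacity is paid for whether or not it is used, it beats on-demand only when expected utilization of that capacity exceeds 1 − d. The $10/hour rate and 40% discount below are hypothetical.

```python
def commit_vs_on_demand(on_demand_rate: float, discount: float,
                        committed_hours: float, used_hours: float) -> dict:
    """Compare paying for a full commitment vs paying on-demand for actual usage only.

    Assumes used_hours <= committed_hours (any overflow would be billed on-demand either way).
    """
    commit_cost = committed_hours * on_demand_rate * (1 - discount)
    on_demand_cost = used_hours * on_demand_rate
    return {
        "commit_cost": commit_cost,
        "on_demand_cost": on_demand_cost,
        "breakeven_utilization": 1 - discount,
        "commit_is_cheaper": commit_cost < on_demand_cost,
    }


if __name__ == "__main__":
    for used in (500, 800):
        print(used, commit_vs_on_demand(10.0, 0.4, 1000, used))
```

With a 40% discount, the commitment only pays off above 60% utilization, which is why rightsizing the term matters as much as the discount itself.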

Security & Data Governance

- Segmentation: Separate projects/tenants; perimeters for data exfil control.

- Encryption: At rest + in transit; consider confidential compute.

- Identity: Short‑lived creds, least‑privilege IAM, workload identity federation.

- Auditability: Centralized logs; tamper‑evident storage.

- Residency: Region pinning; DLP for PII; allowlists for datasets.

- Model governance: Lineage, eval gates, documented use constraints.
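Tamper evidence is commonly achieved by hash-chaining log entries, so editing, reordering or deleting an earlier record breaks verification. This is a minimal in-memory sketch with `hashlib`; the entry format is hypothetical, and a real system would also store the latest chain hash somewhere separately protected so that truncating the tail can be detected.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], record: dict) -> None:
    """Append a record whose hash commits to the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _entry_hash(prev, record)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; an edited, reordered or removed entry (other than
    truncation of the tail) makes this return False."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```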

Sustainability

Greener regions and advanced cooling lower the carbon footprint. Carbon‑aware scheduling and model efficiency (distillation, sparsity) deliver long‑term impact.

AI image: choose greener regions; time‑shift non‑urgent jobs.
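Carbon-aware placement can be sketched as choosing, among permitted regions and start hours, the slot with the lowest average forecast grid carbon intensity that still finishes before the deadline. Region names and intensity values are made up; a real system would pull forecasts from a provider or grid-data source.

```python
def pick_greenest_slot(forecast: dict[str, list[float]], allowed_regions: set[str],
                       duration_h: int, deadline_h: int) -> tuple[str, int, float]:
    """Return (region, start_hour, avg gCO2e/kWh) minimizing average intensity over the run.

    forecast[region][h] is the forecast intensity h hours from now (hypothetical data).
    """
    best = None
    for region in sorted(allowed_regions):  # only regions permitted by residency rules
        hourly = forecast[region]
        for start in range(0, deadline_h - duration_h + 1):
            window = hourly[start:start + duration_h]
            if len(window) < duration_h:
                break  # forecast horizon too short for this start hour
            avg = sum(window) / duration_h
            if best is None or avg < best[2]:
                best = (region, start, avg)
    if best is None:
        raise ValueError("no feasible slot before the deadline")
    return best
```

Passing only residency-approved regions keeps the carbon optimization from overriding data-location rules.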

Regulatory & Data Residency

- Pin sensitive data to compliant regions; restrict egress.

- Map data flows + processing purposes; maintain audit trails.

- Use DLP; consider differential privacy where feasible.
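Region pinning is easiest to enforce as a pre-flight policy check before a job is scheduled. The classification labels, region names and policy table below are hypothetical; note that unknown labels are denied by default.

```python
# Hypothetical policy: regions where each data classification may be processed.
ALLOWED_REGIONS = {
    "public": {"us-east", "us-west", "eu-west", "asia-south"},
    "internal": {"us-east", "us-west", "eu-west"},
    "pii-eu": {"eu-west"},
}


def check_placement(datasets: dict[str, str], target_region: str) -> list[str]:
    """Return violations; an empty list means the job may run in target_region.

    `datasets` maps dataset name -> classification label.
    """
    violations = []
    for name, label in sorted(datasets.items()):
        allowed = ALLOWED_REGIONS.get(label)
        if allowed is None:
            violations.append(f"{name}: unknown classification '{label}' (deny by default)")
        elif target_region not in allowed:
            violations.append(f"{name}: '{label}' data not allowed in {target_region}")
    return violations
```

Returning every violation at once, rather than failing on the first, gives auditors and engineers a complete picture of why a placement was refused.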

Minimizing Vendor Lock‑in

- Open tooling (Kubernetes, PyTorch); portable artifacts.

- Abstract provider differences behind internal APIs.

- Provider‑agnostic CI/CD, registries, and model governance.
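Hiding provider differences behind an internal interface is what keeps workloads portable. The sketch uses a Python `Protocol`; the `ArtifactStore` interface and the in-memory backend are hypothetical stand-ins, not real cloud SDK calls.

```python
from typing import Protocol


class ArtifactStore(Protocol):
    """Internal, provider-neutral interface for model artifacts (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend; a real one would wrap an on-prem or cloud object-storage client."""

    def __init__(self, name: str):
        self.name = name
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def publish_model(store: ArtifactStore, model_id: str, weights: bytes) -> str:
    """Application code depends only on the interface, never on a specific provider."""
    key = f"models/{model_id}/weights.bin"
    store.put(key, weights)
    return key


def replicate(src: ArtifactStore, dst: ArtifactStore, key: str) -> None:
    """Copy an artifact between backends, e.g. for disaster recovery or migration."""
    dst.put(key, src.get(key))
```

Swapping providers then means writing one new backend class, not touching every training or serving job.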

Timeline

0–6 Months

- Capacity onboarding, pilots, benchmarking.

- Burst training; limited regional serving.

6–18 Months

- Full training cycles; multi‑region production.

- Automated cost controls; expanded safety pipelines.

18+ Months

- Deeper on‑prem scheduler integration; unified data buses.

- Diverse accelerators under one operating model.

KPIs & Success Metrics

- $ per training hour (by model family/version)

- $ per 1k inferences (compute + network + storage)

- GPU utilization and latency SLO attainment

- Change failure rate after deployments

- Carbon intensity (gCO₂e per train hour/1k inferences)
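The first two KPIs are simple ratios once spend is tagged by model and service. A sketch with made-up monthly numbers, where $/1k inferences folds in compute, network and storage as defined above:

```python
def dollars_per_1k_inferences(compute: float, network: float, storage: float,
                              requests: int) -> float:
    """All-in serving cost per 1,000 requests (compute + network + storage)."""
    return (compute + network + storage) / requests * 1000


def dollars_per_training_hour(training_spend: float, training_hours: float) -> float:
    """Training spend attributed to one model family/version, per hour of training."""
    return training_spend / training_hours


if __name__ == "__main__":
    # Hypothetical month for one serving fleet and one training run.
    print(f"${dollars_per_1k_inferences(120_000, 15_000, 5_000, 400_000_000):.3f} per 1k inferences")
    print(f"${dollars_per_training_hour(2_400_000, 720):,.0f} per training hour")
```

Tracking these per model version makes the effect of optimizations such as quantization or batching directly visible in cost terms.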

FAQs

Q: Is Meta moving all of its AI to Google Cloud?

A: No. The partnership complements Meta’s in‑house infrastructure, providing cloud burst capacity and regional serving.

Q: Will Meta use TPUs, or only NVIDIA GPUs?

A: Today the core of LLM training runs on NVIDIA GPUs + PyTorch. TPUs may be considered for select workloads on cost/performance grounds.

Q: Will Llama models become exclusive to Google Cloud?

A: No. Llama is available in the open ecosystem (under Meta’s license terms) and can run on multiple platforms.

Q: How is privacy handled?

A: Through strict classification, encryption, isolation and audit logs; sensitive data stays within defined perimeters and regions.

Q: Will this lower AI costs?

A: It improves predictability and efficiency (commit discounts, better utilization). But AI at scale is inherently expensive.

Q: Is this bad news for AWS/Azure?

A: Not necessarily. The market is expanding, and large companies are adopting multicloud.

Conclusion

The Meta–Google Cloud AI deal isn’t about picking a single winner; it’s about speed, flexibility and resilience. A mix of multicloud pragmatism, portable tooling and strong governance is today’s AI reality. The teams that turn this infrastructure edge into useful, safe and delightful experiences will define the next wave.

AI image: Collaboration over competition—cloud partnership for AI scale.

Images: AI-generated via prompt-based generation (no logos/text). Safe for illustrative use.
