AI Regulations and Ethics: How Countries Are Shaping the Future of Artificial Intelligence

Explore how nations worldwide are creating laws and ethical frameworks for AI. Discover global trends, real-world examples, and the future of responsible artificial intelligence.

Table of Contents

- Introduction

- Why AI Regulation and Ethics Matter

- Global Overview: How Countries Approach AI Regulation

- The European Union: Leading the Way with the AI Act

- United States: Innovation vs. Regulation

- China: State-Led AI and Social Governance

- India: Balancing Innovation and Inclusion

- Other Countries: Canada, UK, Japan, and Beyond

- Key Ethical Issues in AI

- Real-World Examples of AI Regulation and Ethics

- Challenges and Controversies

- The Future of AI Regulation and Ethics

- Conclusion

Introduction

Artificial intelligence is transforming every aspect of our lives—from healthcare and finance to transportation and entertainment. But as AI systems become more powerful and widespread, questions about their safety, fairness, and accountability are growing louder. Around the world, governments, organizations, and citizens are asking: How should we regulate AI? What ethical principles should guide its development and use? In this article, we explore how countries are shaping the future of AI through new laws, policies, and ethical frameworks.

Why AI Regulation and Ethics Matter

AI is not just another technology—it’s a force that can amplify both the best and worst of human society. Unregulated AI can lead to biased decisions, privacy violations, job displacement, and even threats to democracy. On the other hand, thoughtful regulation and ethical design can ensure that AI benefits everyone, protects human rights, and builds public trust.

- Safety: Preventing accidents, misuse, and unintended consequences of AI systems.

- Fairness: Ensuring AI does not discriminate based on race, gender, or other factors.

- Transparency: Making AI decisions understandable and explainable.

- Accountability: Defining who is responsible when AI systems cause harm.

- Innovation: Balancing regulation with the need to foster research and economic growth.

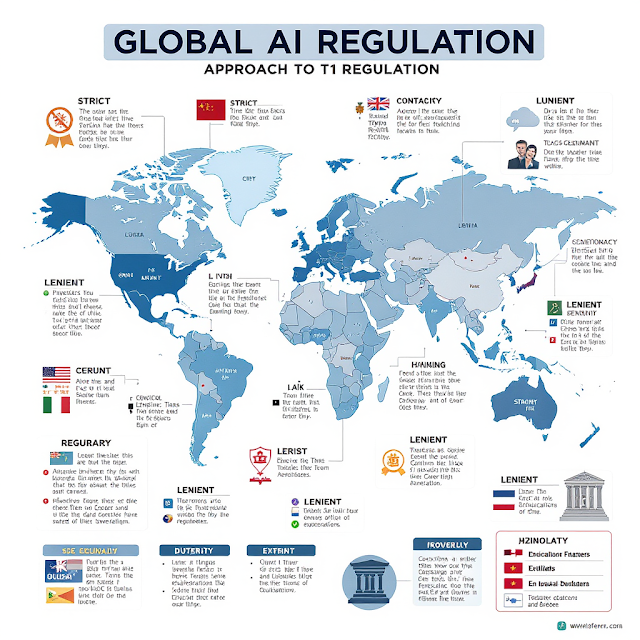

Global Overview: How Countries Approach AI Regulation

There is no single approach to AI regulation. Some countries are moving quickly to set strict rules, while others prefer flexible guidelines or self-regulation. The result is a patchwork of laws and standards that reflect different cultures, values, and political systems.

- Europe: Focuses on human rights, privacy, and risk-based regulation.

- USA: Prioritizes innovation and market-driven solutions, with sector-specific rules.

- China: Emphasizes state control, social stability, and technological leadership.

- India: Seeks to balance innovation, inclusion, and ethical use.

- Other countries: Canada, UK, Japan, and others are developing their own frameworks, often inspired by global best practices.

The European Union: Leading the Way with the AI Act

The European Union is widely seen as a global leader in AI regulation. In 2021, the European Commission proposed the Artificial Intelligence Act, widely described as the world’s first comprehensive legal framework for AI, and the law was formally adopted in 2024. The Act classifies AI systems by risk (unacceptable, high, limited, minimal) and sets strict requirements for high-risk applications, such as facial recognition, critical infrastructure, and hiring tools.

- Key Features:

  - Ban on certain uses of AI (e.g., social scoring and, with narrow law-enforcement exceptions, real-time remote biometric identification in public spaces).

  - Mandatory transparency and human oversight for high-risk AI.

  - Heavy fines for non-compliance (up to 6% of global annual turnover in the 2021 proposal, raised to 7% for prohibited practices in the text adopted in 2024).

- Impact: The EU AI Act is influencing policy debates worldwide, as other countries look to Europe for guidance on responsible AI.
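The four risk tiers described above can be pictured as a simple lookup from use case to obligations. The following is a minimal Python sketch, not a legal tool: the names `RiskTier`, `EXAMPLE_USE_CASES`, `OBLIGATIONS`, and `obligations_for` are hypothetical and invented for illustration, and the "limited" and "minimal" examples (a customer-service chatbot, a spam filter) are commonly cited illustrations rather than items from this article. The Act itself assigns tiers through detailed legal criteria that a lookup table cannot capture.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels named in the EU AI Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative mapping only (an assumption for this sketch).
# Real classification depends on the legal text, not on a label.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "hiring tool": RiskTier.HIGH,
    "critical infrastructure control": RiskTier.HIGH,
    "customer-service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}

# Heavily simplified summaries of what each tier implies.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "strict requirements, including transparency and human oversight",
    RiskTier.LIMITED: "lighter transparency duties",
    RiskTier.MINIMAL: "no specific obligations",
}


def obligations_for(use_case: str) -> str:
    """Return a one-line summary for a use case known to this sketch."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        return "unclassified in this sketch"
    return f"{tier.value}: {OBLIGATIONS[tier]}"


if __name__ == "__main__":
    for name in EXAMPLE_USE_CASES:
        print(f"{name} -> {obligations_for(name)}")
```

The point of the sketch is the shape of the rule: obligations attach to the use case and its risk tier, not to the underlying technology.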

United States: Innovation vs. Regulation

The United States is home to many of the world’s leading AI companies and research labs. The US approach to AI regulation is more decentralized, with a focus on innovation and economic competitiveness. Instead of a single national law, the US relies on a mix of sector-specific rules (e.g., for healthcare, finance, and transportation) and voluntary guidelines.

- Key Developments:

  - The White House released the “Blueprint for an AI Bill of Rights” in 2022, outlining principles for safe and ethical AI.

  - Federal agencies like the FDA and FTC are developing AI-specific rules for their sectors.

  - States like California and Illinois have passed their own AI and data privacy laws.

- Debate: Some experts worry that the US is falling behind in setting global AI standards, while others argue that too much regulation could stifle innovation.

China: State-Led AI and Social Governance

China has made AI a national priority, investing billions in research and development. The Chinese government takes a top-down approach to AI regulation, combining strict state control with ambitious goals for technological leadership. China’s AI policies emphasize social stability, security, and economic growth.

- Key Policies:

  - Guidelines for “next-generation AI governance” focused on safety, fairness, and controllability.

  - Rules for deepfakes, recommendation algorithms, and facial recognition.

  - Social credit systems and surveillance technologies raise global concerns about privacy and human rights.

- Global Impact: China’s approach is influencing other countries, especially in Asia and Africa, that see AI as a tool for development and social management.

India: Balancing Innovation and Inclusion

India is one of the world’s fastest-growing digital economies, with a vibrant AI startup scene and a large, young population. The Indian government is working to create an AI policy that balances innovation, economic growth, and social inclusion.

- Key Initiatives:

  - National Strategy for Artificial Intelligence (“AI for All”) focuses on healthcare, agriculture, education, and smart cities.

  - Draft guidelines for responsible AI, including fairness, transparency, and accountability.

  - Emphasis on using AI to bridge social and economic divides, not widen them.

- Challenges: India faces unique challenges, such as digital literacy, data privacy, and the need to protect jobs in a rapidly changing economy.

Other Countries: Canada, UK, Japan, and Beyond

Many other countries are developing their own AI regulations and ethical guidelines, often inspired by international organizations like the OECD and UNESCO.

- Canada: Released the Pan-Canadian Artificial Intelligence Strategy in 2017, one of the first national AI strategies, with a focus on responsible innovation and public trust.

- United Kingdom: The UK’s “AI Roadmap” emphasizes pro-innovation regulation, public engagement, and global leadership in AI safety.

- Japan: Promotes “Society 5.0”—a vision of a human-centered, AI-powered society with strong ethical standards.

- Brazil, South Korea, Australia, UAE: Each country is experimenting with its own mix of laws, incentives, and ethical codes.

International cooperation is growing, with countries working together on cross-border issues like data sharing, cybersecurity, and AI in warfare.

Key Ethical Issues in AI

AI raises complex ethical questions that go beyond technology. Here are some of the most important issues being debated worldwide:

- Bias and Discrimination: AI systems can reflect and amplify existing social biases, leading to unfair outcomes in hiring, policing, lending, and more.

- Privacy: AI often relies on massive amounts of personal data, raising concerns about surveillance and consent.

- Transparency and Explainability: Many AI models are “black boxes”—it’s hard to understand how they make decisions.

- Autonomy and Control: Who is responsible when AI systems act independently or make mistakes?

- Human Dignity: How do we ensure that AI respects human rights and does not undermine our sense of agency?

- Job Displacement: Automation powered by AI could replace millions of jobs, creating social and economic challenges.
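To make the bias concern concrete, one common first check is to compare selection rates across groups, for example in the output of a hiring tool. The sketch below is a minimal, hypothetical illustration: the function names, the sample data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment guidance) are assumptions for the example, not a method described in this article and not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to its share of positive decisions.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Made-up data: group A selected 6 of 10 times, group B 3 of 10 times.
    sample = (
        [("A", True)] * 6 + [("A", False)] * 4
        + [("B", True)] * 3 + [("B", False)] * 7
    )
    rates = selection_rates(sample)
    ratio = disparate_impact_ratio(rates)
    print(rates, ratio, "flag for review" if ratio < 0.8 else "ok")
```

A low ratio does not prove discrimination on its own, but it signals that a system's outcomes deserve a closer look.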

Real-World Examples of AI Regulation and Ethics

- Facial Recognition Bans (USA & Europe): Cities like San Francisco and Boston have banned government use of facial recognition due to privacy and bias concerns. The EU AI Act also restricts real-time biometric surveillance.

- AI in Hiring (Illinois, USA): Illinois passed the Artificial Intelligence Video Interview Act, which requires employers to notify applicants when AI is used to analyze video interviews, obtain their consent, and limit how the recordings are shared.

- AI in Healthcare (UK & India): The UK’s NHS and India’s health ministry are developing guidelines for safe, ethical use of AI in diagnostics and patient care, focusing on transparency and patient consent.

- Data Privacy Laws (GDPR, CCPA): The EU’s GDPR and California’s CCPA set strict rules for data collection, use, and consent—impacting how AI companies operate worldwide.

Challenges and Controversies

Regulating AI is not easy. Technology moves faster than the law, and there are many open questions:

- Global Coordination: How can countries align their rules to avoid “AI havens” with weak oversight?

- Enforcement: How do we monitor and enforce compliance, especially for complex or open-source AI systems?

- Innovation vs. Regulation: How do we protect society without stifling research and economic growth?

- Ethics in Practice: How do we turn high-level principles into real-world action and accountability?

There are also concerns about “ethics washing”—when companies or governments use ethical language to avoid real change.

The Future of AI Regulation and Ethics

The future of AI regulation and ethics will be shaped by ongoing debates, new technologies, and global events. Here are some trends to watch:

- AI for Good: More countries and companies are adopting “AI for good” principles, using technology to address social and environmental challenges.

- International Standards: Organizations like the OECD, UNESCO, and the G7 are working on global AI standards and best practices.

- Public Engagement: Citizens, civil society, and marginalized groups are demanding a voice in how AI is governed.

- AI and Democracy: The rise of deepfakes, algorithmic manipulation, and surveillance is forcing new conversations about democracy and human rights.

- AI and the Global South: Developing countries are seeking to shape AI policy in ways that reflect their own needs and values, not just those of the US, China, or Europe.

Ultimately, the goal is to create a future where AI is safe, fair, and beneficial for all. This will require ongoing collaboration between governments, companies, researchers, and the public.

Conclusion

AI is one of the most powerful forces shaping our world today. As countries race to harness its potential, they must also confront its risks and challenges. The choices we make now—about regulation, ethics, and global cooperation—will determine whether AI becomes a tool for progress or a source of harm. By working together, we can build a future where artificial intelligence serves humanity, respects our rights, and reflects our highest values.

How do you think your country should regulate AI? Share your thoughts and join the conversation below!

